De-mystifying AI Agent Applications

Mai Lubega, a Senior Software Engineer turned Machine Learning Engineer at Deliveroo, shares her takeaways from a recent workshop on building AI agents. Discover why model size matters, how to manage conversation context effectively, and why an LLM is just an NPC until it gets an MCP.

LLMs, MCPs, RAG. There are lots of acronyms in the AI space, but what do they all mean? Dear reader, despite being a software engineer who works in the machine learning space, I confess there was a time I wasn’t really sure. Fortunately, with the financial support of Deliveroo’s Women-in-Tech Employee Resource Group, I took Ardan Labs’ “Building AI-Powered Applications in Go” workshop, which helped me understand what’s really going on behind the chat interface and where software engineering meets LLM-based applications.

We worked through a series of modules that incrementally built a RAG (Retrieval-Augmented Generation) AI agent application, in which relevant documents are retrieved and added to the model’s prompt. I started by generating vector embeddings from text and ended up with an MCP client and server that could process image and text input and respond to simple queries. By the end, I understood the new AI landscape much, much better. For my dearest gentle reader, here are my three takeaways from the course:

Size Matters

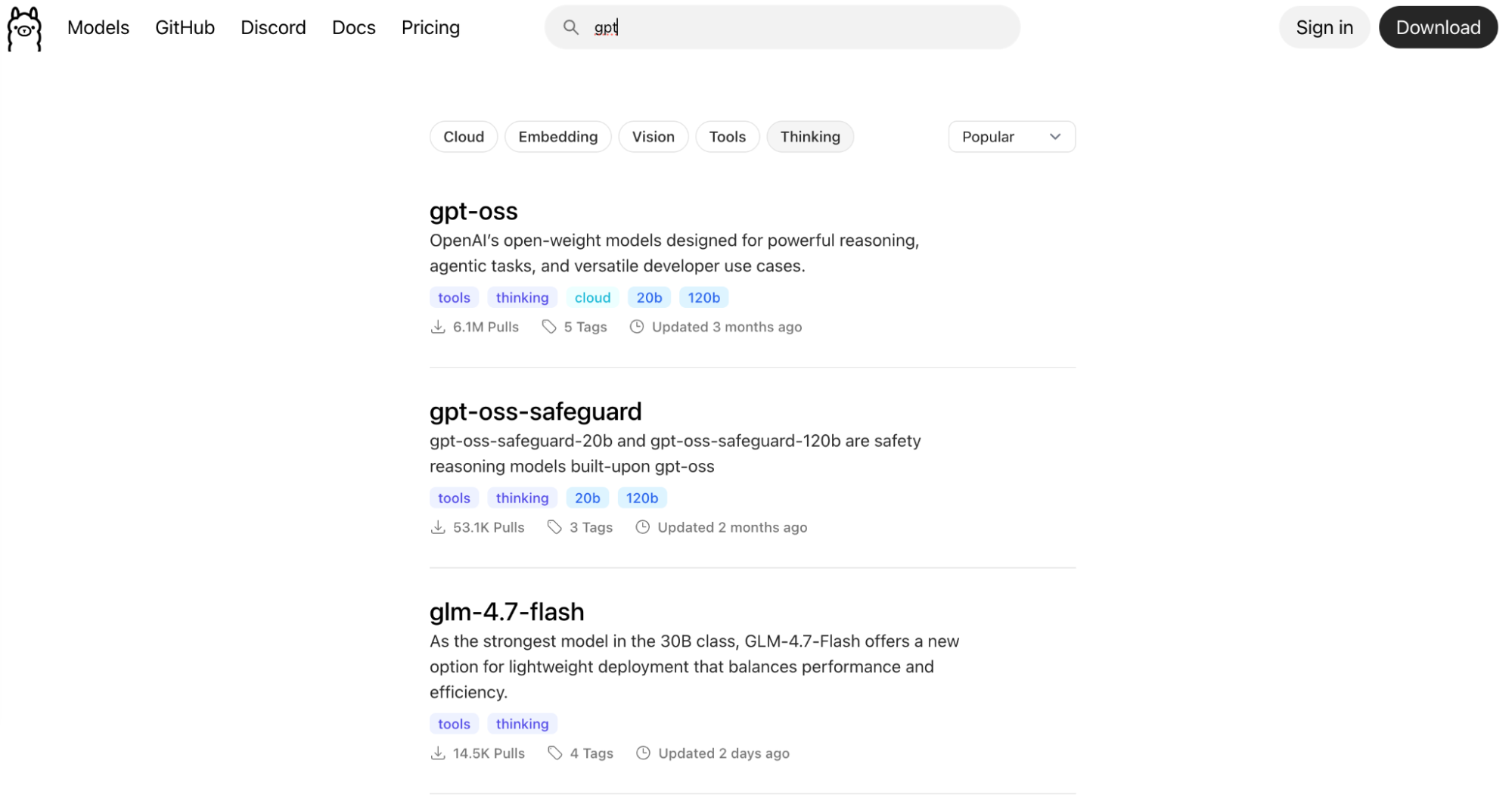

Full-size language models are very large and can require several hundred gigabytes of memory to run, far more than a personal laptop offers. To work around this constraint, we used Ollama, an open-source tool for downloading and running smaller, more memory-efficient LLMs locally. In the real world, many applications sidestep the problem by sending data to hosted APIs such as OpenAI’s or Anthropic’s. They don’t have to host any models themselves, just send and receive data (subject to usage tiers and rate limits, of course). But applications that must run a model in-house for proprietary reasons, such as keeping sensitive data private, have to manage the infrastructure themselves, which usually means a very spicy cloud bill or a server in the corner that doubles as a space heater.
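Where does “several hundred gigabytes” come from? A common back-of-the-envelope estimate is parameters × bytes per parameter: 16-bit weights take 2 bytes each, while 4-bit quantized weights take about half a byte. The Go sketch below applies that rule to a few hypothetical model sizes. It counts only the weights and ignores the KV cache, activations and runtime overhead, so real memory usage is higher.

```go
package main

import "fmt"

// weightMemoryGB estimates the memory (in GB, 1 GB = 1e9 bytes) needed just to
// hold a model's weights: parameters × bytes per parameter.
// It deliberately ignores KV cache, activations and runtime overhead.
func weightMemoryGB(params, bytesPerParam float64) float64 {
	return params * bytesPerParam / 1e9
}

func main() {
	// Hypothetical model sizes and precisions, chosen for illustration only.
	examples := []struct {
		name          string
		params        float64
		bytesPerParam float64
	}{
		{"405B params, 16-bit", 405e9, 2},
		{"70B params, 16-bit", 70e9, 2},
		{"8B params, 4-bit", 8e9, 0.5},
	}
	for _, e := range examples {
		fmt.Printf("%-20s ~%.0f GB\n", e.name, weightMemoryGB(e.params, e.bytesPerParam))
	}
}
```

The arithmetic makes the trade-off clear: a 405-billion-parameter model at 16-bit precision needs roughly 810 GB for its weights alone, while an 8-billion-parameter model quantized to 4 bits needs about 4 GB, which fits comfortably on a laptop.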

Don’t Lose the Plot (Manage your Context)

Think of the context as the LLM’s short-term memory. When we chat with an AI, we don’t just send the current question; we send the entire conversation history, the system instructions, and any retrieved content, collectively known as ‘The Context.’ As the conversation goes on, that memory gets crowded and expensive. Moreover, every LLM has a context window: the maximum number of tokens it can consider at once. The workshop covered several clever techniques for managing this: using OpenAI’s tiktoken tokenizer to count tokens and monitor how much of the context window is in use, summarizing older parts of the conversation to save space, and removing the oldest messages once the limit is reached. While frameworks like LangChain and LlamaIndex help automate this, efficient memory management is crucial for building apps that don’t lose the plot halfway through a task.
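The “remove the oldest messages” strategy fits in a few lines. The Go sketch below keeps the system prompt and then walks backwards from the newest message, keeping as many recent turns as fit within a token budget. It rests on stated assumptions: the `Message` type is hypothetical, the system prompt is assumed to be the first message, and `estimateTokens` is a crude word-count stand-in for a real tokenizer such as tiktoken, so its numbers will not match any real model’s token counts.

```go
package main

import (
	"fmt"
	"strings"
)

// Message is a hypothetical chat message, loosely modelled on the
// role/content shape that most chat APIs use.
type Message struct {
	Role    string
	Content string
}

// estimateTokens is a crude stand-in for a real tokenizer such as tiktoken:
// it counts whitespace-separated words and adds a small per-message overhead.
func estimateTokens(m Message) int {
	return len(strings.Fields(m.Content)) + 4
}

// trimToBudget keeps the system prompt (assumed to be messages[0]) plus the
// longest run of most-recent messages that fits in maxTokens, dropping the
// oldest turns first.
func trimToBudget(messages []Message, maxTokens int) []Message {
	if len(messages) == 0 {
		return messages
	}
	system := messages[0]
	used := estimateTokens(system)
	start := len(messages) // nothing kept yet besides the system prompt
	for i := len(messages) - 1; i >= 1; i-- {
		cost := estimateTokens(messages[i])
		if used+cost > maxTokens {
			break // everything older than this is dropped
		}
		used += cost
		start = i
	}
	return append([]Message{system}, messages[start:]...)
}

func main() {
	history := []Message{
		{"system", "You are a helpful assistant."},
		{"user", "What is RAG?"},
		{"assistant", "Retrieval-Augmented Generation adds retrieved documents to the prompt."},
		{"user", "And MCP?"},
	}
	for _, m := range trimToBudget(history, 30) {
		fmt.Printf("%s: %s\n", m.Role, m.Content)
	}
}
```

With a budget of 30 (estimated) tokens, the first user question no longer fits and is dropped. The summarization technique would go one step further: instead of discarding those older turns, it would replace them with a single short summary message, typically written by the model itself.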

LLMs alone are like NPCs (Non-Player Characters) until they get MCPs

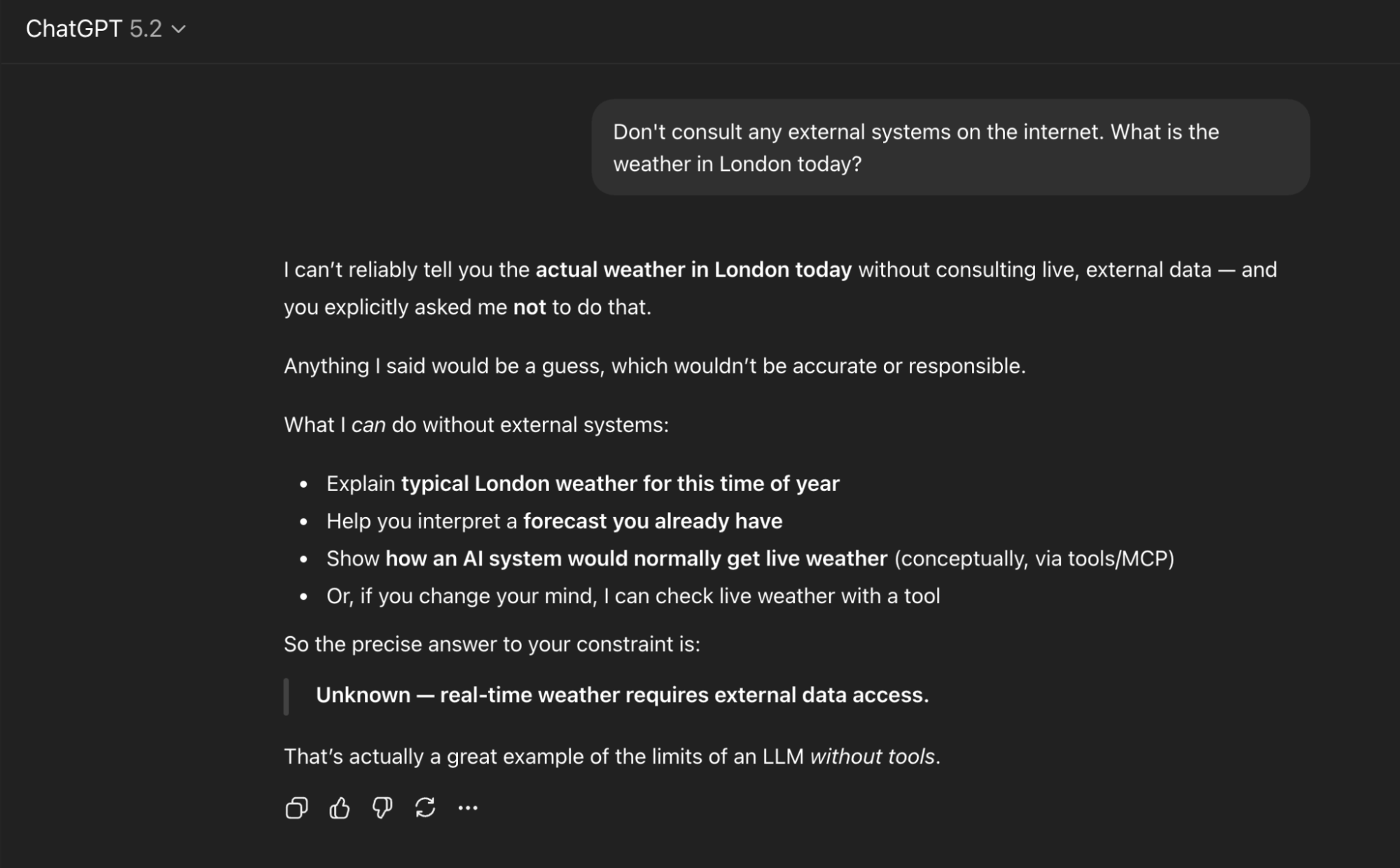

LLMs like Claude, Gemini, and GPT are brilliant reasoning models, but on their own, they have zero agency. In gaming terms, they are the ultimate Non-Player Characters (NPCs): they can tell you the lore of the world in startling detail, but they can’t actually pick up a sword or open a chest. In more practical terms, if you asked a vanilla LLM what the weather is, it could only guess; it can’t search the internet and pull the latest forecast.

This is where MCP (Model Context Protocol) changes the game. MCP is an open protocol that lets an application expose tools and data sources to a model through MCP servers, so the model can interact with the world beyond its training data: the model decides which tool to call, and the application’s MCP client forwards that call to the server, which runs it and hands back the result. In the workshop, we built MCP tools that let the LLM interact with the OS, allowing it to run shell commands, query live databases, and read or write files. The real magic happens when you build MCP tools for specialized domains: instead of a generic chatbot, you get a specialized agent. For example, a hospital ward management AI agent could use an MCP tool to query real-time bed-management databases and suggest efficient patient transfers. Similarly, an AI agent for construction logistics could read architectural blueprints and then check the project’s ERP system via MCP tools to verify whether the required steel beams are actually on the truck for Tuesday’s delivery.

Without these tools, your state-of-the-art AI is essentially just a very polite shopkeeper in a video game who can tell you the lore, but can’t actually help you finish the quest.

Looking Forward

Despite all the doomsayers claiming AI is taking our jobs, the workshop showed me plenty of interesting challenges for software engineers building AI agents. In fact, I recently attended a tech talk by the Deliveroo team building an Agentic AI Platform, but that’s a story for another time. I’m excited to see this space grow, and I plan to remain happily employed, solving interesting problems at the intersection of software engineering and AI.